Is the innovator's dilemma outdated?

What's a platform and what's a product? Plus, what's a fad and what's fundamental? And finally, what?

For years, a bad venture capitalist’s idea of a good question was, “How would your startup survive if Google[1] decides to build the same thing you’re building?”

It sounds like a smart concern: Rather than being a simple question about what your product does or whether people will want to buy it, it is about market dynamics, second-order effects, and competitive moats. It is about ecosystems, and economics, and two-by-two grids full of little logos. And to give a satisfying answer, you had to say some aesthetically clever thing about data flywheels and network effects and the architectural implications of being mobile or cloud or blockchain or AI native.

But it was a midwit question, because Google wasn’t going to build your product. Your niche service—a CRM for private equity investors who are rolling up regional car wash franchises; an observability tool to monitor engineers’ level of frustration, and profanity, when prompting a vibe-coding bot;[2] a non-discriminatory texting app to say hi to your bros—doesn’t matter to Google. To build your product, Google has to decide to build your product, and the only products Google wants to build are ones that can materially affect an incomprehensibly big income statement. It is not worth it, to Google, to reallocate budgets and create teams and develop roadmaps and generally disrupt the operational machinery of their very large businesses and very ambitious product bets to build something small and specialized.[3] So they mostly don’t do that, until your startup becomes something big enough to attract their attention—and then they’re just as likely to buy it as they are to build it.

Or, to paraphrase the infamous innovator’s dilemma, big firms don’t like small markets:

Incumbent firms are likely to lag in the development of technologies—even those in which the technology involved is intrinsically simple—that only address customers' needs in emerging value networks. Disruptive innovations are complex because their value and application are uncertain, according to the criteria used by incumbent firms.

Of course, Google’s dilemma isn’t quite that small markets or niche products don’t matter at all; lots of small markets can add up to something big. The problem is that each market has some fixed overhead. For example, if you want to build a robot to help technical analysts make sense of business data,[4] you have to talk to a bunch of analytics teams about their troubles; you have to do competitive research; you have to develop your own sense of expertise and intuition about where you think the market might go and what future customers might want. And if Google is going to do this, and take all of these chances, the product that this process produces can’t disappear into a rounding error.

Therefore, Google isn’t building a dedicated product to help analysts write code.[5] So who is? Well!

When I search for “AI analyst,” the first sponsored result that Google returns to me is an ad for Claude. But if you click on any of the links,[6] you aren't sent to some dedicated analytics bot; you are sent to https://claude.ai/new, which starts a generic chat session with Claude.

Nothing about the landing page is specifically designed for data analysis.[7] Nothing about Claude is specifically designed for data analysis. Instead, Anthropic is advertising that their very general chatbot can be used for a very specific thing. Or, put differently, they are launching a new product with just an ad.

Actually, no. They are launching dozens of new products with ads. A brand strategist; a copywriter; a marketing roadmap designer; a JSON formatting utility; a market research analyst—Claude launched all of these products and services with ads, each of which sends you to the same undecorated URL for the same utility chatbot.

Which is weird! Or at least, very different! It used to be that, to build something, a company had to do it intentionally. This opened up a lot of space for new products, because, as small companies grew bigger, they stopped chasing small things. Shrubs will choke out the grass, but tall trees do not.

Now, when AI labs update their models or chat apps, products accidentally fall out. For example: Both WriteSonic and Jasper initially launched themselves as tools for creating marketing content. WriteSonic (then ChatSonic) “surpasses the limitations of ChatGPT to give you factually correct results” by integrating “with Google Search to create content with the latest information.” And Jasper was a wrapper around GPT-3, and plugged some of ChatGPT’s early holes with models taught to be better marketers. According to Jasper’s CEO, their “language models—trained on 10% of the web and fine-tuned for ‘customer specificity’—set it apart.”

Then the foundational models became better marketers—not because OpenAI or Anthropic decided that they especially cared about creating marketing content, but because the models became generically “smarter”: They could write better, search more, and more effectively reason their way through anything, including how to write corporate blog posts, on their own. The marketing sense that WriteSonic and Jasper wrapped around ChatGPT was eventually baked into ChatGPT itself. And now, WriteSonic has pivoted into becoming an AI SEO analyst, while Jasper is no longer a writing product, but a “full stack AI platform,” with a no-code app builder, an AI context layer,[8] and hundreds of integrations into tools like Word, Google Docs, and Google Sheets.

Claude can now create and edit Excel spreadsheets, documents, PowerPoint slide decks, and PDFs directly in Claude.ai and the desktop app. This transforms how you work with Claude—instead of only receiving text responses or in-app artifacts, you can describe what you need, upload relevant data, and get ready-to-use files in return.

That URL points to the exact same place as all of Anthropic’s ads: An empty chat session with Claude. One new feature; a hundred products, incidentally subsumed.

A while back, I said that OpenAI might become another AWS:

The more lucrative opportunity for OpenAI, it seems, is to sit behind the apps that are fighting for our attention. In that scenario, whoever wins, so does OpenAI.

Though it’s possible to be both AWS and the iPhone, that’s a very tall order. As Steve Yegge suggested in his famous memo about Google and Amazon, you can be a great platform or a great product.

But maybe that is changing. If more and more software starts to look like a smart robot and a spreadsheet, the platform and the product are effectively the same thing. In an AI marketer—or an AI analyst, or an AI engineer—where’s the line between the application and the foundational infrastructure? Are clever prompts, manual reasoning loops, and context engineering features of the app, or the model? How will your startup survive if Anthropic accidentally builds the same thing you’re building?

The fundamentals

Do your parents know what’s cool?

Do they know what people like? Do they know who’s popular on the internet? Do they know which influencers are drawing crowds, starting riots, building cults? Do they know how kids talk? Do they understand the words they use? The emojis? The memes?

If you asked them to post on Twitter for you, would you trust them to do it? On Instagram? On LinkedIn, even? If you asked them to represent your personality on the internet—your brand, your style, your voice, your sense of humor, you, for all intents and purposes, since who you are on the internet is perhaps a more canonical version of yourself than who you are off it—would you let them?

Do they understand how today’s society works? Do they understand the traffic of modern commerce? Do they know what TikTok Shop is? Do they know that it's bigger than Macy's? Do they know what people shop for, and why they buy what they buy? Do they know who tells them what to buy? Do they know which stores they like? Can they find the stores; can they even conceive of their metaphysical existence?

Do they know what’s trending? Do they know what’s cringe? What’s chopped? Do they know how tall LaMelo Ball is?

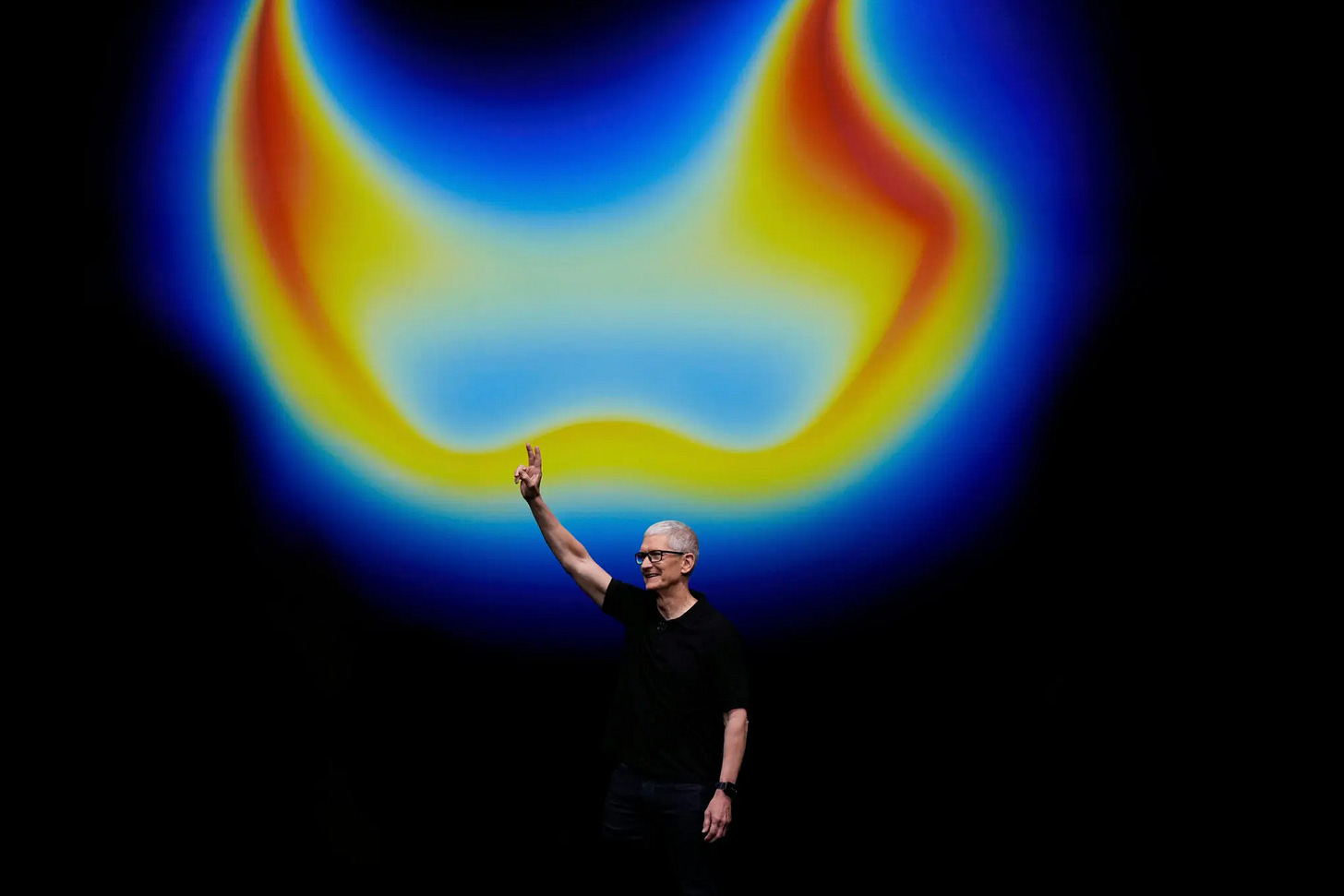

Anyway, earlier this week, Apple launched several new products, including the next-generation iPhone, and Ben Thompson thought something seemed missing:

What was shocking to me, however, was actually watching the event in real time: my group chats and X feed acknowledged that the event was happening, but I had the distinct impression that almost no one was paying much attention, which was not at all the case a decade ago.

Thompson offered a commercial explanation: People care about AI now, not phones and hardware. Apple’s AI efforts have been haltingly inconsistent, and “why should anyone who cares about AI—which is to say basically everyone else in the industry—care about square camera sensors or vapor chambers?”

That may be true, but you have to wonder if it misses something larger: That Apple—and Thompson, and me, and probably many of you—have an outdated understanding of how the world works. It may be that nobody was talking about Apple’s big launch event because Apple isn’t doing interesting things with AI, but it also may be because big launch events have no cultural traction. They, like us, are part of a shrinking conversational artery. Apple’s playbook is stale; its vocabulary is stale; its entire economic model of demand generation is stale. Apple’s launch events are losing their grip over Silicon Valley because everything has to be a meme or a controversy now, and polite launch events don’t produce memes or controversy.[9]

It is easy to see when other people are the unc. They are from an earlier generation; a different time. For a while, when you’re living in the main channel, the world confirms your instincts: Their weird anachronisms are disappearing, and your vocabulary is ascendant.

But then we get stuck. And it is much harder to let go of our own sense of cosmology—because our beliefs don’t feel like fads, but fundamentals. Apple could make memes, sure, but tight messaging is fundamental. Polish and quality are fundamental. A well-executed launch is fundamental. The innovator’s dilemma is fundamental. The old generations believed in discredited science; our beliefs are settled science; the new generations are chasing pseudoscience.

But you see the problem, right?

MSNBC becomes BI

You could have two theories about this recent opinion piece in the Financial Times:[10]

President Donald Trump thinks the habit of forcing companies to report earnings at three-monthly intervals is “Not good!!!”. He is probably right. But since he last complained about quarterly reporting in 2018, the debate has moved on. The alternative will soon be not less disclosure, but much more.

…

Between businesses shifting their data to the cloud and artificial intelligence creating new ways to slice and dice it, it should one day be possible to see how companies are performing in close to real time. Tech groups such as Palantir, Databricks and Snowflake already help companies turn disparate records into crunchable digital data for executives to use and peruse. AI agents can summon information, package it and add gloss as well as any silver-tongued chief executive.

Company bosses will blanch at the idea of radical financial transparency. After all, it amounts to handing over some control of how corporate facts are presented. Real-time reporting would also reduce the value of financial window-dressing — arranging cash flows to make the quarterly snapshot more attractive — to zero. Still, some nerves could be allayed by keeping a one-month lag, or by presenting data on a three-month average basis.

Such innovations may be a while away, because AI still makes mistakes and thus requires human review. …

Nonetheless, AI is advancing faster than most executives and investors thought possible a couple of years ago. And as the technology makes real-time data and analysis easier and faster to generate, it is naive to think that companies can get away with offering investors less information than they do now. Odds are that reporting financial performance at three-month intervals will indeed become a thing of the past, but not for the reasons Trump has proposed.

The first theory is: What?

Google doesn’t withhold live dashboards of how many people are using Google because Wall Street analysts can’t write a SQL query, or because they can’t compute a three-month average in Excel without ChatGPT; it withholds them because it doesn’t want to publish them! And when it does want to, it does publish them! Famously so! What does AI have to do with any of this!
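For a sense of how little machinery this takes: the softening the FT floats above, a one-month lag plus a three-month average, is a few lines of arithmetic. Below is a minimal Python sketch, not anything a company actually runs; the function name `lagged_trailing_average` and the sample figures are hypothetical, and it assumes the monthly numbers arrive as a plain list ordered oldest to newest.

```python
from typing import Optional, Sequence


def lagged_trailing_average(
    monthly: Sequence[float], window: int = 3, lag: int = 1
) -> Optional[float]:
    """Average the `window` months that end `lag` months before the newest month.

    Hypothetical helper for illustration. `monthly` is ordered oldest to newest.
    Returns None when there isn't enough history to fill the window after the lag.
    """
    if window <= 0 or lag < 0:
        raise ValueError("window must be positive and lag must be non-negative")
    if len(monthly) < window + lag:
        return None
    # Hold back the most recent `lag` months (the reporting delay)...
    end = len(monthly) - lag
    # ...then average the `window` months just before that cutoff.
    return sum(monthly[end - window:end]) / window


# Made-up monthly figures, purely to exercise the function.
figures = [10.0, 20.0, 30.0, 40.0, 50.0]
print(lagged_trailing_average(figures))         # 30.0 (averages 20, 30, 40)
print(lagged_trailing_average(figures, lag=0))  # 40.0 (averages 30, 40, 50)
```

In a spreadsheet, the same thing is an `AVERAGE` over a three-cell range that stops one row short of the latest month.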

More generously, the second theory is: It’s not the game the company cares about; it’s the broadcast:

Though [data] exists in an approximately raw form, most analysis comes with some sort of narrative. Corporate analysts caption their presentations to call out what’s most important. The Wall Street Journal delivers quantitative news in paragraphs of prose. Just as we’re given selective views of a basketball game [during a broadcast], data consumers—executives, “business users,” the people who ask analysts questions—are typically given curated cuts of data, with its own sidebar of color commentary. …

But those words—even seemingly innocuous ones, the sort that most people would still describe as objective—are inevitably manipulative. As I’m writing this, the top headline on the Wall Street Journal is about Google. That article opens with what appears to be basic reporting:

—

Google’s earnings power is holding up well, even as the internet giant spends record sums on artificial intelligence in the midst of global economic turbulence. Parent company [Alphabet] reported operating income of $30.6 billion for the first quarter on Thursday—solidly beating Wall Street’s forecast of $28.7 billion.

—

Holding up? Holding up well? Solidly beating forecasts? Is a $2 billion beat solid? Expected? Record-setting? I don’t know. But I assume it must be solid, because that’s what it says. Had that line said “barely beating Wall Street’s forecast of $28.7 billion,” my perspective on Google would be entirely different, even if the numbers were the same.

That’s why AI could change how companies report data—not because it can write queries for Wall Street analysts, but because it can influence Wall Street analysts. If companies put public chatbots on top of their corporate databases, it won’t be for radical transparency, or because they haven’t had the technology to “crunch digital data” without it. Instead, they’ll do it because that chatbot will be told that it should use the right optimistic adjectives, that it should keep to the company line about the company’s recent performance, and that it should not look quiet and downbeat when it’s asked if the CEO is having an affair.

Everything becomes BI, but BI becomes the corporate spokesperson.

Over or under: Someone gets fired for threatening an AI by January 1, 2027. (And when it happens, how does that get politically coded? Who’s on which side in that case?)

Google’s balance sheet says its figures are displayed “in millions,” and there are no decimals. Google makes $28 billion in profit every quarter. Google makes more than $300 million in profit every day. Google’s daily profit is bigger than the lifetime revenue of your startup; it is bigger than your startup’s paper valuation; it is bigger than your total addressable market.
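The per-day figure here is plain division. A quick check in Python, taking the $28 billion quarterly figure at face value and assuming an average quarter of 365 / 4 = 91.25 days (real quarters run 90 to 92 days); because the statements are denominated in millions, $28 billion appears as 28,000. The constant and function names are illustrative only.

```python
QUARTERLY_PROFIT_MILLIONS = 28_000  # $28 billion, written the way an "in millions" statement shows it
DAYS_PER_QUARTER = 365 / 4          # assumption: an average quarter of 91.25 days


def daily_profit_millions(quarterly_millions: float, days: float = DAYS_PER_QUARTER) -> float:
    """Spread a quarterly figure (in millions of dollars) evenly across the days in the quarter."""
    return quarterly_millions / days


daily = daily_profit_millions(QUARTERLY_PROFIT_MILLIONS)
print(f"about ${daily:,.0f} million per day")  # about $307 million per day
```

Dividing by 92, the longest possible quarter, still gives roughly $304 million, so “more than $300 million” holds either way.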

But Macro—built by several of Mode’s best!—is! The reviews from its users—relayed to me by a Macro employee, but still!—are that it “looks like the best thing for analysis I've ever seen!” Check it out! (But also, you know.)

They have a conversational chatbot inside of Looker and a Gemini integration inside of BigQuery, but neither is a specialized service for data analysts like Julius (or Macro!).

If you click on the image above, it’ll pass you through the same interstitial that Google sends you through.

It’s a little surprising to me that they don’t have some mechanic that updates the placeholder text in the chat box that says “How can I help you today?” with something like “What data can I help you analyze?”

Of note: That Gap ad and the Apple launch event have almost exactly the same number of views on YouTube. But the Gap ad blew up on TikTok—and then, in the zeitgeist.

Shout to Camille Fournier for this very excellent find.

Regarding the first part - I'm quite used to hearing that question. But usually it's not Google or similar, but rather a big player in your market that can, actually, decide to build your product. Take Solid for example - it's very likely Snowflake and/or Databricks will build (or buy) a competing product. So, it's a legitimate question. The issue I have with that question is that my answer is obvious - they already know the answer when they ask it.

So why ask it?

Secondly, the fact that the foundational models are expanding into everyone's territory is a really interesting dynamic. I've been watching it for the past two years as new models have kept coming out. The conclusion - build a product that gets much better as the models get better. Don't fix gaps in those models because the gaps will likely close.

I always loved the question "How would your startup survive if Google decides to build the same thing you're building?" and would always fire back with "What's your fund going to do if a16z starts investing in early stage?" Well, that's exactly what happened and Andreessen and Sequoia went and started doing seed rounds :)

The whole point of disruptive innovation is creating new markets when existing ones have massive barriers or have turned into red oceans. Porter's still relevant here despite what Christensen said. Kahneman wrote about how tough it is to get people onto something new because your product has to push something else out of their heads and wallets.

VCs usually reckon that if a product has no market and no competitors (or at least substitutes), it's rubbish. But we're dealing with a completely new paradigm here, creating fresh demand patterns because nobody was using AI for flirting or pitch decks before.

The problem is every market has its top 2 players and everyone else: Apple vs Samsung, BMW vs Mercedes, Uber vs Lyft, OpenAI vs Anthropic, Coke vs Pepsi. Everyone from 3rd place onwards gets absolutely nothing, which explains why I haven't bothered with Jasper in about 2 years.

Claude writes code alright, but try asking it to modify existing code and the whole thing goes tits up.

AI's going to replace analysts and associates on Wall Street pretty bloody soon. Consulting's already having a nightmare and McKinsey are absolutely doing their nut trying to keep up with trends by building their own internal products.

Time to grab the popcorn and watch it all unfold.